Episode Transcript

Transcripts are displayed as originally observed. Some content, including advertisements, may have changed.


0:00

You've probably heard about artificial intelligence and

0:02

ChatGPT, but do you know the

0:04

person in charge? On our podcast, Good

0:06

Bad Billionaire, we tell the stories of how

0:08

the world's billionaires made their money. We're

0:11

telling the story of Sam Altman, the boss

0:13

of OpenAI, which makes ChatGPT. He became

0:15

a billionaire this year, but his wealth has

0:17

nothing to do with artificial intelligence. He

0:20

actually got rich investing in other tech startups.

0:22

Listen to Good Bad Billionaire to learn how

0:24

he did it and whether he's good or

0:26

bad. That's Good Bad Billionaire wherever you

0:28

get your BBC podcasts. Welcome

0:41

to How to Fail, the podcast

0:43

that treats all failure as necessary

0:45

data acquisition about what to do

0:47

better next time, because that way

0:49

we can be more truly successful.

0:52

And would you like access to

0:54

some extra special content? Course

0:57

you would. You can join me for

0:59

more How to Fail in our subscriber

1:01

series, Failing with Friends. Every

1:03

week, my guest and I answer your

1:05

questions and play agony aunt and uncle

1:07

to your failures. You'd be

1:10

surprised how helpful and therapeutic these

1:12

mini-sessions are. This week, you'll

1:14

hear more from Yuval:

1:16

no matter how urgent it seems,

1:19

slowing down is usually the best thing

1:22

to do. And we'd love

1:24

to hear from you. Follow the link in the

1:26

podcast notes. And you

1:28

can listen to How to Fail on Amazon

1:30

Music or just ask Alexa, "Play How to

1:32

Fail with Elizabeth Day on Amazon Music." My

1:43

guest today is one of the great

1:45

thinkers of our age. Yuval

1:48

Noah Harari is an historian, philosopher,

1:50

and a best-selling author of some

1:52

of the most influential books of

1:54

the last half century. Sapiens:

1:57

A Brief History of Humankind

2:00

tells our story from the Stone

2:02

Age up until the political and

2:04

technological revolutions of the 21st century.

2:07

It has sold over 25 million

2:09

copies, been translated into 45 languages,

2:13

and spent 96 weeks in the New

2:15

York Times bestseller charts. His

2:17

other works, which examine the nature

2:19

of consciousness, free will, and whether

2:21

humanity has a future, include

2:24

Homo Deus: A Brief History of

2:26

Tomorrow, and 21 Lessons for the

2:28

21st Century.

2:30

His latest, Nexus, takes

2:32

on AI, asking

2:34

us to consider the complex relationship

2:36

between information and truth, and

2:39

raising the urgent choices we face

2:41

today as non-human intelligence

2:43

threatens our very existence.

2:46

Born and raised in Israel, Harari taught

2:49

himself to read at the age of

2:51

three. At university,

2:53

he specialised in medieval

2:55

history. "This

2:57

is the best reason to learn history,"

2:59

he later reflected, "not to

3:02

predict the future, but to free

3:04

yourself from the past and

3:06

imagine alternative destinies." Yuval

3:09

Noah Harari, welcome to How to Fail. Thank

3:12

you. Thank you for inviting me. You

3:14

are famed for coining the

3:17

term the cognitive revolution as

3:19

it pertains to Homo sapiens,

3:21

that idea that we are our

3:24

stories. This is our capability alone.

3:27

But I wanted to know what story you tell

3:29

about yourself. If someone were

3:31

to ask you, who are you, Yuval? What

3:33

would you say? That's

3:35

a difficult question. Professionally,

3:38

I define myself as a historian. I

3:41

do talk about AI and things like that

3:43

because I think history is not the study

3:45

of the past, it's the

3:47

study of change, of how things change, and

3:50

this applies to the present as well as

3:53

to the future. Personally, it's

3:55

a much more difficult question. I'm not sure.

3:57

I mean, there is no single answer. I

4:01

think that I keep changing also. So

4:04

the answers I give to who I am also keep

4:06

changing. That child at

4:08

the age of three who taught himself to read,

4:11

what was he like? Very

4:13

inquisitive, very

4:15

sensitive, really

4:17

on a physical level. For

4:19

instance, it took me something like an

4:23

hour or two hours to be able to

4:25

open my eyes in the morning. I'm

4:27

still not sure what it was, but when

4:29

I would wake up, my eyes

4:31

would just burn so much from any,

4:34

like even the slightest amount of light

4:36

that I had to keep them shut. Yeah,

4:39

very, very sensitive to the world. Did

4:41

you feel that the world was safe

4:43

when you were three? No.

4:46

No. I mean, I would keep... No, not

4:48

at age three, but later on I would

4:50

keep asking the adults about the

4:53

big questions that many kids ask about

4:55

the meaning of life and why we

4:57

are here and what happens after we

4:59

die. And what really

5:01

shocked me was not that the adults

5:03

didn't have good answers, it's

5:06

that they didn't seem very concerned about

5:08

the fact that they don't have answers.

5:11

They would be very concerned about other things,

5:14

like be it the latest political scandal

5:16

or my grades in school or whatever,

5:18

this was important. But the fact they

5:20

don't know the meaning of life, nobody cares about

5:22

it. And

5:24

do you think that's a human defense mechanism? Yes,

5:27

absolutely. Basically, I think that

5:30

all the fears in the world

5:32

are just a kind of camouflage

5:34

or masquerade for this one big

5:36

fear that usually we just

5:39

can't face, that we feel

5:41

that, okay, I can handle this, but

5:44

not the full scale

5:46

problem. I love

5:48

that image of the young Yuval not opening

5:51

his eyes because it was too much. And

5:54

I have read about your

5:56

information diet and I wonder

5:59

if it was partly that. You just couldn't,

6:01

you knew at some level that you

6:03

had to pace what you took in

6:05

because you felt and you thought so much.

6:08

Tell us about your information diet. It's

6:11

not mine. It's basically the

6:13

idea that people are

6:15

extremely mindful, many people are very mindful

6:17

about what they eat, what

6:19

food they give to their body. And

6:22

we should be equally mindful about

6:24

the food that we feed our

6:26

minds. And here

6:28

we tend to be much more careless. First

6:31

of all, feeding our minds far too much. Like

6:34

food, we need food, but too much of it is

6:36

not good for us. It's the same with information. The

6:39

idea that more

6:41

information is always good for us, this is

6:43

nonsense. We need

6:45

some kind of moderation there too. And

6:48

similarly, the same way that it's not good for

6:50

our body to stuff in too much

6:52

junk food, so it's not good for

6:54

the mind to stuff in too much

6:56

junk information. And

7:01

the same way that you have on

7:03

all kinds of chips and sweet

7:05

things, these warnings that this contains 40%

7:08

fat and 10% sugar or whatever. So

7:12

we maybe need some labels on the

7:14

information we consume. Like you watch some

7:16

video on TikTok or whatever, and it

7:19

should come with a warning, with a

7:21

label. It also contains 40% greed, 20% rage. If

7:26

you want to feed your mind with this,

7:28

okay, it's a free country, but just be

7:30

aware that this is what you're putting

7:33

in. That's such a great idea.

7:36

And how do you use your smartphone? Because I think you

7:38

have a smartphone, but you don't use it all the time.

7:41

Yeah, I try to use it instead of letting

7:43

it use me. And to

7:45

minimize its usage as much as possible.

7:47

It's more like an emergency smartphone for

7:49

when I travel, like here now, and

7:51

I need a method

7:54

to get in touch. And increasingly, there

7:56

are many services that you just can't

7:58

get unless you use it. This

23:20

episode is brought to you by Shopify. Forget

23:24

the frustration of picking commerce platforms

23:26

when you switch your business to

23:28

Shopify, the global commerce platform that

23:30

supercharges your selling wherever you sell.

23:32

With Shopify, you'll harness the same

23:34

intuitive features, trusted apps, and powerful

23:37

analytics used by the world's leading

23:39

brands. Sign up today for your

23:41

$1 per month trial

23:43

period at shopify.com/tech, all lower

23:46

case. That's shopify.com

23:48

slash tech.

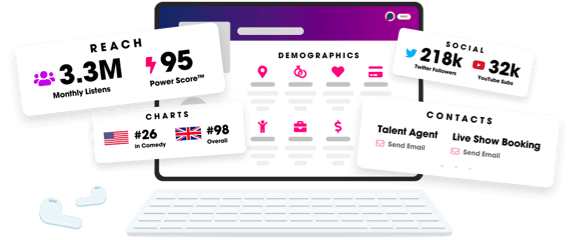

Unlock more with Podchaser Pro

- Audience Insights

- Contact Information

- Demographics

- Charts

- Sponsor History

- and More!

- Account

- Register

- Log In

- Find Friends

- Resources

- Help Center

- Blog

- API

Podchaser is the ultimate destination for podcast data, search, and discovery. Learn More

- © 2024 Podchaser, Inc.

- Privacy Policy

- Terms of Service

- Contact Us